- Publisher: Icon Books

- Category: Humanities

- Language: English

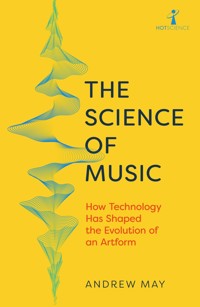

Music is shaped by the science of sound. How can music – an artform – have anything to do with science? Yet there are myriad ways in which the two are intertwined, from the basics of music theory and the design of instruments to hi-fi systems and how the brain processes music. Science writer Andrew May traces the surprising connections between science and music, from the theory of sound waves to the way musicians use mathematical algorithms to create music. The most obvious impact of science on music can be seen in the way electronic technology has revolutionised how we create, record and listen to music. Technology has also provided new insights into the effects that different music has on the brain, to the extent that some algorithms can now predict our reactions with uncanny accuracy, which raises a worrying question: how long will it be before AI can create music on a par with humans?

Page count: 218

Year of publication: 2023

Hot Science is a series exploring the cutting edge of science and technology. With topics from big data to rewilding, dark matter to gene editing, these are books for popular science readers who like to go that little bit deeper …

Available now and coming soon:

Destination Mars: The Story of Our Quest to Conquer the Red Planet

Big Data: How the Information Revolution is Transforming Our Lives

Gravitational Waves: How Einstein’s Spacetime Ripples Reveal the Secrets of the Universe

The Graphene Revolution: The Weird Science of the Ultrathin

CERN and the Higgs Boson: The Global Quest for the Building Blocks of Reality

Cosmic Impact: Understanding the Threat to Earth from Asteroids and Comets

Artificial Intelligence: Modern Magic or Dangerous Future?

Searching for Alien Life: The Science of Astrobiology

Dark Matter & Dark Energy: The Hidden 95% of the Universe

Outbreaks and Epidemics: Battling Infection from Measles to Coronavirus

Rewilding: The Radical New Science of Ecological Recovery

Hacking the Code of Life: How Gene Editing Will Rewrite Our Futures

Origins of the Universe: The Cosmic Microwave Background and the Search for Quantum Gravity

Behavioural Economics: Psychology, Neuroscience, and the Human Side of Economics

Quantum Computing: The Transformative Technology of the Qubit Revolution

The Space Business: From Hotels in Orbit to Mining the Moon – How Private Enterprise is Transforming Space

Game Theory: Understanding the Mathematics of Life

Hothouse Earth: An Inhabitant’s Guide

Nuclear Fusion: The Race to Build a Mini-Sun on Earth

Biomimetics: How Lessons From Nature Can Transform Technology

Eyes in the Sky: Space Telescopes from Hubble to Webb

Consciousness: How Our Brains Turn Matter Into Meaning

Hot Science series editor: Brian Clegg

Published in the UK and USA in 2023

by Icon Books Ltd, Omnibus Business Centre,

39–41 North Road, London N7 9DP

email: [email protected]

www.iconbooks.com

Sold in the UK, Europe and Asia

by Faber & Faber Ltd, Bloomsbury House,

74–77 Great Russell Street,

London WC1B 3DA or their agents

Distributed in the UK, Europe and Asia

by Grantham Book Services,

Trent Road, Grantham NG31 7XQ

Distributed in the USA

by Publishers Group West,

1700 Fourth Street, Berkeley, CA 94710

Distributed in Australia and New Zealand

by Allen & Unwin Pty Ltd,

PO Box 8500, 83 Alexander Street,

Crows Nest, NSW 2065

Distributed in South Africa

by Jonathan Ball, Office B4, The District,

41 Sir Lowry Road, Woodstock 7925

Distributed in India by Penguin Books India,

7th Floor, Infinity Tower – C, DLF Cyber City,

Gurgaon 122002, Haryana

Distributed in Canada by Publishers Group Canada,

76 Stafford Street, Unit 300,

Toronto, Ontario M6J 2S1

ISBN: 978-178578-991-5

eBOOK ISBN: 978-178578-990-8

Text copyright © 2023 Icon Books Ltd

The author has asserted his moral rights.

No part of this book may be reproduced in any form, or by any

means, without prior permission in writing from the publisher.

Typeset in Iowan by Marie Doherty

Printed and bound in Great Britain

by Clays Ltd, Elcograf S.p.A.

ABOUT THE AUTHOR

Andrew May is a freelance writer and former scientist, with a PhD in astrophysics. He is a frequent contributor to How It Works magazine and the Space.com website and has written five books in Icon’s Hot Science series: Destination Mars, Cosmic Impact, Astrobiology, The Space Business and The Science of Music. He lives in Somerset.

CONTENTS

1 Music and Technology

2 The Physics of Sound

3 Musical Algorithms

4 The Electronic Age

5 Music and the Brain

Further Resources

Chronological Playlist

1 MUSIC AND TECHNOLOGY

The idea that music and science have anything to do with each other would have struck most people as ridiculous 50 years ago. After all, music is an artform, and the arts and sciences are often seen as opposites – or at any rate, non-overlapping domains. Today, however, the gap between science and music may seem less of a gulf, thanks to the ubiquity of music and audio technology.

But technology is just the tip of the iceberg. It’s the thing we’re most aware of, but in fact, the relationship between science and music goes much deeper. One purpose of science is to pave the way to new technology, but it’s also about improving our understanding of the world we live in. And, of course, music is an integral part of that world. Science can help to show us how music works, both in terms of the way it is created and the way we hear and respond to it. As surprising as it may seem, much of music is really quite mathematical in nature, from its basic scales and rhythms to the complex ways that different chords relate to each other. Musicians don’t need a conscious understanding of these mathematical relationships – for most of them, it’s a matter of intuition – but it can be interesting to look at them all the same. If nothing else, it shows that the domains of art and science aren’t that far apart after all.

This studio, used by electronic music pioneer Karlheinz Stockhausen, resembles a scientific laboratory.

Wikimedia user McNitefly, CC-BY-SA-3.0

Some of the connections between science and music have become more visible through the use of technology, such as synthesisers and digital audio workstations (DAWs), but the fact is the connections have always been there. From a scientific perspective, all sounds, whether musical or otherwise, are vibrations in the air – or vibrations in any other medium, whether gas, liquid or solid. These vibrations spread out from the point of origin in the form of a wave, which is one of the most fundamental concepts in physics. Gravitational waves, radio waves, light rays, X-rays and even electron beams all propagate from A to B in the form of waves. In each case, something – some measurable quantity – is going up and down like a wave on the surface of the sea. In the case of a sound wave, the thing that goes up and down is pressure, so in contrast to an obviously undulating water wave, you have a moving pattern of compression and rarefaction. It’s that pattern which, when it enters our ears, our cleverly evolved hearing system interprets as sound.

So how is a sound wave created in the first place? All sorts of natural processes can do that – anything that causes a disturbance in the surrounding air, from raindrops to a thunderclap. And, of course, human vocal cords. That’s how music started – with simple a cappella singing – but to take it beyond that, we have to turn to technology. The Concise Oxford Dictionary defines technology as ‘the application of scientific knowledge for practical purposes’, so we’re not necessarily talking about electronics here. We’ll see in the next chapter how the ancient Greeks worked out how the design of a wind, string or percussion instrument determines the shape of the sound waves it produces. In that sense, even a drum, flute or lyre is ‘music technology’.

Another big impact that technology has had on music is the ability to record a performance and play it back later. Today, that’s inextricably linked to electronics, but it doesn’t have to be. The first practical phonograph, invented by Thomas Edison in 1877, was purely mechanical. An ear trumpet-like horn amplified incoming sounds sufficiently to vibrate a stylus, which made a copy of the audio waveform onto a wax cylinder that was rotated at a steady rate by a clockwork mechanism. Playing back the sound was literally the reverse of recording: the rotating cylinder made the stylus vibrate and the horn amplified the sound to the point that it was audible.

A simple contraption like this is never going to produce the kind of high-fidelity sound you could sit and listen to with pleasure, but it’s good enough to make some kind of permanent record of a performance. And that’s where we come to the real irony – or maybe even tragedy – of the situation. Edison’s phonograph was so simple it could have been made, if it had crossed anyone’s mind to do so, centuries earlier. So just think of all the musical performances that could have been recorded but weren’t. Both Bach and Beethoven were popular keyboard players as well as composers – and their real crowd-pleasers were virtuoso improvisations rather than written-out compositions. That, however, was in the days before phonographic recording, so all those performances are lost to history.

In fact, in the case of a keyboard player, such a recording doesn’t even require a phonograph. Sometime after Edison’s invention, someone belatedly worked out that, using a mechanism inside a specially modified piano, you can record a pianist’s performance on a roll of perforated paper and then play it back automatically as often as you like. The ‘player piano’, as it was called, was very popular between its invention in 1896 and the advent of good (or at any rate acceptable) quality phonograph recordings in the 1920s, after which it fell into obscurity. But maybe not completely so. If you’ve ever used a DAW, you’ll be familiar with its ‘piano roll’ editor – a name that harks back to the old perforated paper rolls of a player piano.

There’s a rather silly trend in classical music these days towards ‘authenticity’ – getting as close as possible to the notated score, which is presumed to represent the composer’s exact intention. But that might not be the case at all. When Chopin played one of his mazurkas – a Polish-style dance notated with three beats in a bar – for the conductor Charles Hallé, he played it with four beats in a bar. When Hallé pointed this out, Chopin explained that he was reflecting the ‘national character of the dance’. If that performance had been captured on a piano roll or wax cylinder, Chopin’s mazurkas might be played in a completely different way today.

As it happens, we do have a recording of one famous 19th-century composer playing the piano. Johannes Brahms died in 1897 – which happens to be the same year that a Cambridge professor named J.J. Thomson discovered the electron, so you know the quality of recordings made at this time is not going to be good. Made in 1889 using an Edison phonograph, the Brahms recording is a strong contender for the worst recording of a great musician ever made. You can hear it in a YouTube video made by a talented young pianist calling himself ‘MusicJames’.* It’s well worth watching the whole thing; with the aid of some clever detective work, James disentangles exactly what Brahms is playing – and it’s not what any classical pianist today would expect.

The basic problem with the recording becomes apparent right at the start when Brahms speaks a few words in an unnaturally high-pitched voice. That’s because the wax cylinder only preserved higher-frequency sounds, so that when he starts playing one of his Hungarian dances, you can only hear the notes his right hand plays, not the left. The trouble, from a musician’s point of view, is that what the right hand is playing doesn’t quite match the notated score. By interpolating what must be going on in the left hand, James concludes that Brahms is playing in what might well have been the standard pianistic style of his time, full of ‘rhythmic improvisation techniques which have gone extinct in classical music over the last century’.

Without technology, even in the crude form of an Edison phonograph, musical insights like this would be impossible. And without technology in the far more sophisticated form of digital workstations, electronic synthesisers and streaming services, a vast proportion of today’s music simply wouldn’t exist. In turn, the technology itself wouldn’t exist if someone – generally the developers rather than the users – did not have a strong grasp of the underpinning science.

As an example, consider the brilliant, genre-hopping musician Jacob Collier, who is still in his twenties as this book is being written. There’s no obvious science in his background or upbringing. His mother is a music teacher, violinist and conductor, and his grandfather was leader of the Bournemouth Symphony Orchestra. As a child, Collier sang the key role of Miles in three different productions of Benjamin Britten’s The Turn of the Screw, one of the greatest of all 20th-century operas. In 2021, his rap song ‘He Won’t Hold You’ won a Grammy award, and he narrowly missed getting Album of the Year too. Part of his popularity undoubtedly comes from a series of YouTube videos in which he shows exactly how he creates his music. And now we come to the point, because some of his explanations look more like science than art.

Like many musicians today, Collier puts his songs together almost entirely with the aid of software, in the form of a DAW. Apple users, whether they’re musicians or not, probably have some knowledge of how this works through playing around with the GarageBand DAW that comes free with Mac computers and iPads and iPhones (albeit in cut-down form). The DAW used by Collier is a more sophisticated Apple product called Logic Pro, but from a functional point of view it’s very similar to GarageBand, as are numerous other DAWs made by other companies. The user interface is oriented towards musicians, but the back end of the software is pure science – specifically, the science of acoustics and audio signal processing. This occasionally breaks through in Collier’s videos, when he uses distinctly scientific-sounding jargon like ‘waveform’ and ‘quantisation’. Albert Einstein would have understood those terms, but a musician of his era, such as Ravel or Rachmaninov, probably wouldn’t.

Another multi-talented young musician is Claire Boucher, who creates music under the name Grimes. Her music is even more intensely electronic than Collier’s. As she put it in a 2015 interview: ‘I spend all day looking at fucking graphs and EQs and doing really technical work.’ EQ, of course, means equalisation, and to many people it’s simply another piece of music jargon. But – as we’ll see in the chapter on electronic music – it really means changing the shape of an audio signal in the frequency domain. EQ has a lot more to do with physics than music theory.
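
To make the idea of ‘changing the shape of an audio signal in the frequency domain’ a little more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of how any real DAW implements EQ: the function names, the 64-sample block and the two test tones are all invented for the example, and the transform is a deliberately naive discrete Fourier transform written with the standard library only.

```python
import cmath
import math

def dft(samples):
    """Naive discrete Fourier transform: time domain -> frequency domain."""
    n = len(samples)
    return [sum(samples[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]

def inverse_dft(spectrum):
    """Inverse transform: frequency domain -> time domain (real part only)."""
    n = len(spectrum)
    return [sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)).real / n
            for k in range(n)]

def equalise(samples, gain_for_bin):
    """Scale each frequency bin by a gain, then return to the time domain."""
    spectrum = dft(samples)
    n = len(spectrum)
    # Bins above n/2 mirror the ones below, so both halves get the same gain.
    shaped = [value * gain_for_bin(min(f, n - f)) for f, value in enumerate(spectrum)]
    return inverse_dft(shaped)

# Hypothetical test signal: a low tone (2 cycles per block) plus a quieter
# high tone (12 cycles per block).
N = 64
low = [math.sin(2 * math.pi * 2 * k / N) for k in range(N)]
high = [0.5 * math.sin(2 * math.pi * 12 * k / N) for k in range(N)]
mix = [a + b for a, b in zip(low, high)]

# A crude 'EQ curve': keep bins up to 6 unchanged and remove everything above.
filtered = equalise(mix, lambda b: 1.0 if b <= 6 else 0.0)

# With the high tone removed, what is left should match the low tone.
error = max(abs(x - y) for x, y in zip(filtered, low))
print(error < 1e-9)  # True
```

A real equaliser would use a smoother gain curve, boosting or cutting bands by a few decibels rather than switching them off, but the principle of reshaping the spectrum is the same.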

The use of digital technology has transformed the whole sound of contemporary music. In the hands of a great artist/producer, the act of sampling snippets of existing works – anything from a well-known James Bond theme to obscure Eastern European rock music – has become an art form in itself. Through skilful manipulation of samples – looping, re-pitching, speeding up or slowing down and adding a host of subtle electronic effects – it’s possible to add whole new layers of emotional texture to a song in a way that would have been impossibly time-consuming a few decades ago.

It’s a similar story across all music genres. Classical composer Emily Howard has a university degree, not in music, but in mathematics and computer science – both subjects she frequently draws on in her work. In the world of electronic dance music, Richard D. James is best known by the alias of Aphex Twin, but one of his early albums was released under a different pseudonym, Polygon Window. Its title, Surfing on Sine Waves, is an electronic musician’s pun – yet there was a time, not so long ago, when no one but an engineer or scientist was likely to know what a sine wave is. When the ‘avant-pop’ artist Björk released her heavily electronic album Biophilia in 2011, it was launched in parallel with a mobile app that further explored the musical and thematic background of the songs. So, in numerous different ways, music and technology are becoming inseparable in the modern world.

Science has had an impact on the way people listen to music too. If you use a streaming service, you’re probably concerned about having sufficient bandwidth – another term that, until a few decades ago, was virtually unknown outside the sciences. Or maybe you use noise-cancelling headphones, which are entirely reliant on the physics of sound waves for their effectiveness. Even the very word ‘electronics’ – inextricably associated with music technology in many people’s minds – comes from the electron, one of the seventeen elementary particles of modern physics. Without a thorough understanding of its behaviour, the electronic technology most of us depend on for our music would simply be impossible.

All this technology is rooted, as the Oxford Dictionary says, in basic science. That’s not to say, of course, that musicians have to understand the science in order to exploit the technology; fortunately, it’s quite the opposite. While most scientists (like many people in all walks of life) are music lovers, not all musicians are science lovers.

That’s always been the case. In 1877, the British physicist Lord Rayleigh produced a 500-page textbook called The Theory of Sound, which became a classic of its kind in the scientific world. But not in the musical world. Towards the end of his life, the great composer Igor Stravinsky admitted: ‘I once tried to read Rayleigh’s Theory of Sound but was unable mathematically to follow its simplest explanations.’

If you find yourself sympathising with Stravinsky, you’ll be pleased to hear there isn’t much in the way of textbook physics or complicated mathematics in this book. I just want to highlight a few of the many respects in which music is more closely related to science than it might appear – in a way that I hope is both thought-provoking and entertaining. Here’s a taste of what’s in store:

Chapter 2 takes a closer look at sound – musical and otherwise – from a scientific point of view. This is where we really get to grips with the concept of sound as a wave or vibration and trace the history of this notion all the way from ancient Greece to the present day. It’s in this chapter that some of the sciencey-sounding music jargon (or musicky-sounding science jargon?) that I’ve already bandied about, such as sine waves and the frequency domain, gets a proper explanation.

Chapter 3 is all about musical parameters and algorithms – but it hasn’t got anything to do with computers. Musicians have been playing with ‘parameters’ like the pitch and duration of notes and applying ‘algorithms’ – which ultimately are just collections of rules – to the way they string notes together since time immemorial. And by the middle of the 20th century, with composers like Arnold Schoenberg, John Cage and Karlheinz Stockhausen on the scene, musical algorithms started to take on a distinctly ‘scientific’ appearance.

To most people, if the term ‘scientific music’ means anything at all, it refers to modern electronic music – and that’s the subject of Chapter 4. This takes us all the way from the birth of electronics in the early 20th century, through the pioneering experiments of Cage, Stockhausen and others in the 1950s, to the ever-widening use of synthesisers through the 1960s, ’70s, ’80s and ’90s – before finally coming up to date in today’s world of DAWs, plugins and computer algorithms.

That might be the end of the book if we were just talking about music technology, but actually, there’s a whole other side to the science of music, and that’s the ‘brain science’ behind how we perceive music, and how musicians create it. This is a topic we look at in the final chapter, together with the intriguing question of whether, as computers get more and more powerful, they will ever be able to create their own music on a par with that of human musicians.

While I was writing the book, there were various points – particularly when discussing science-inspired composition methods – where I couldn’t resist trying my hand at it. With the caveat that I’m just a computer geek, not a musician, you can check out the results on a YouTube playlist I created, entitled ‘The Science of Music’.

*https://www.youtube.com/watch?v=XEDGPG8C5Y4

2 THE PHYSICS OF SOUND

In space, as everybody knows, no one can hear you scream. That’s because space is a vacuum, and a sound wave – which is basically a rapid sequence of pressure fluctuations – needs a material substance to travel through. It doesn’t have to be a particularly dense substance, though. The atmosphere of Mars, at ground level, is almost 100 times thinner than our own, but it’s still enough to carry sound waves. We know that for certain now, because NASA’s Perseverance rover, which landed on the red planet in February 2021, has a couple of microphones on board. NASA has a great web page where you can hear some of the sounds they’ve recorded, including gusts of Martian wind, the Ingenuity helicopter in flight and the trundling of the rover’s own wheels.*

The same web page also includes a selection of audio clips recorded on Earth processed to sound the way they would if they were played on Mars. Compared with the originals, these are fainter and more muffled-sounding, with higher-pitched sounds like the tweeting of birds being almost inaudible. That’s due to the different properties of the Martian and terrestrial atmospheres, which affect the way sounds propagate through them. All in all, though, it’s surprising – and maybe a little disappointing – just how ordinary the Martian sounds recorded by Perseverance are.

Coming back down to Earth, you may have noticed – if you’ve spent any time snorkelling in the sea, for example – that sound travels through water as well as air. In fact, it generally carries further underwater than through the atmosphere; as you drift above a reef, a jet ski half a kilometre away may sound like it’s about to crash into you. That’s why whales, like humans, use sound to communicate. Some whale songs can remain audible over hundreds or even thousands of kilometres.

In the human world, navies use underwater sound waves, in the form of sonar, to hunt for things like enemy submarines. This is a choice that’s forced on them by the laws of physics because radio waves don’t really work underwater – they’re attenuated to virtually nothing within a few metres – so the more obvious choice of radar is a non-starter. But pretty much everything that a radar can do above water – such as determining the distance, speed or even shape of a target – can be achieved equally well in the underwater domain by sonar. And the analogy between radio and sound waves is a useful one, because – from a scientific point of view, at least – a radio wave may be a more familiar concept to many people. We can use it to explore just how sound waves work in a bit more depth.

Measuring sound waves

Two common terms in the context of radio are ‘frequency’ and ‘wavelength’. FM radio stations, for example, generally identify themselves by the frequency they’re broadcasting on – X megahertz, say – while older stations would often specify a wavelength in metres. The two numbers aren’t independent – if you know one, you can work out the other. All radio signals travel through the Earth’s atmosphere at the speed of light, approximately 300 million metres per second. If you imagine a cyclic wave pattern travelling at that speed and repeating itself once every metre – having a wavelength of one metre, in other words – then it will whiz past you at a frequency of 300 million cycles per second. The technical shorthand for ‘cycles per second’ is hertz (Hz), so putting all that together, the frequency of the wave is 300 megahertz.
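
The link between the two numbers is simply frequency = speed ÷ wavelength. As a quick sketch of that arithmetic in Python (the function names and the 100 MHz station are illustrative assumptions; the speed is the rounded 300 million metres per second quoted above):

```python
# Rounded speed of light used in the text (metres per second).
SPEED_OF_LIGHT = 300_000_000

def frequency_hz(wavelength_m, speed=SPEED_OF_LIGHT):
    """Cycles per second for a wave with the given wavelength."""
    return speed / wavelength_m

def wavelength_m(frequency, speed=SPEED_OF_LIGHT):
    """Distance between successive peaks for a wave of the given frequency."""
    return speed / frequency

# The example from the text: a wavelength of one metre.
print(frequency_hz(1.0) / 1e6, "MHz")  # 300.0 MHz

# A hypothetical FM station broadcasting on 100 MHz.
print(wavelength_m(100e6), "m")        # 3.0 m
```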

Sound waves work in much the same way, albeit travelling at a much slower speed. The speed of sound in a given medium depends on a variety of factors, including pressure, temperature and composition. Under normal conditions at the Earth’s surface, it’s around 340 metres per second, but on Mars it’s closer to 240 metres per second. On the other hand, the speed of sound in water is much higher – typically around 1,500 metres per second. So sound waves don’t always have the same one-to-one relationship between frequency and wavelength as radio. Yet sounds in different environments, such as underwater or on Mars, don’t really sound all that different to our ears. It turns out that the really important property of a sound wave is its frequency, which determines the rate at which our eardrums vibrate and hence the sound our brains hear. So, for most purposes, we might as well forget about the speed and wavelength of sound waves and just concentrate on their frequency.
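
To see how much the wavelength of one and the same note shifts between media, here is a small Python sketch using the approximate speeds quoted above. The choice of 440 Hz (concert A) is an assumption made purely for illustration, as are the variable names.

```python
# Approximate speeds of sound quoted in the text (metres per second).
SPEED_OF_SOUND = {
    "air on Earth": 340.0,
    "air on Mars": 240.0,
    "water": 1500.0,
}

NOTE_HZ = 440.0  # assumption: concert A, chosen only as a familiar example

def wavelength_m(frequency_hz, speed_m_per_s):
    """Wavelength = speed / frequency."""
    return speed_m_per_s / frequency_hz

# The frequency stays fixed; only the wavelength depends on the medium.
wavelengths = {medium: wavelength_m(NOTE_HZ, speed)
               for medium, speed in SPEED_OF_SOUND.items()}

for medium, length in wavelengths.items():
    print(f"{medium}: {length:.2f} m")
# air on Earth: 0.77 m
# air on Mars: 0.55 m
# water: 3.41 m
```

The wavelength varies by a factor of about six across these three media, while the frequency, the property the ear responds to, is 440 Hz in every case.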

Sound frequencies are generally much lower than the frequencies used by radio stations, ranging up to a few tens of kilohertz (kHz) rather than hundreds of megahertz (MHz). Technically, we could say that, for example, an audio broadcast has a ‘carrier frequency’ of 100 MHz, but a bandwidth – i.e. the amount of useful information it contains – of 50 kHz. It’s easy enough to see why this has to be the case. The radio wave can only carry an audio signal if its shape is varied – or ‘modulated’, to use the technical term – in sync with the sound wave that’s being transmitted, but obviously, this modulation can’t change the frequency of the radio signal very much because it has to stay within the channel width of a suitably tuned radio receiver.

The upshot is that the carrier frequency of the radio wave has to be much higher than the frequency of the modulating audio signal that it’s conveying. You may think I’m being terribly technical here, but the fact is you’ve already heard about the principle I’m talking about. It’s in the names of the two different types of radio broadcasting: amplitude modulation (AM) and frequency modulation (FM). Of the two, AM is the easier to visualise, as shown in the diagram opposite. FM is harder to illustrate because it involves changing the frequency of the wave (affecting the left–right distance between peaks) rather than the amplitude (up–down distance between peaks).
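
The difference between the two schemes can also be sketched in a few lines of Python. This is a toy model under loud assumptions: the carrier and audio frequencies are scaled right down (1 kHz and 50 Hz rather than something like 100 MHz and a few kilohertz) so the numbers are easy to inspect, the ‘audio’ is a single sine tone, and the function names, modulation depth and frequency deviation are all invented for the example.

```python
import math

SAMPLE_RATE = 48_000  # samples per second (assumption)
CARRIER_HZ = 1_000.0  # scaled-down stand-in for a radio carrier
AUDIO_HZ = 50.0       # scaled-down stand-in for the audio signal

def am_sample(t, depth=0.5):
    """Amplitude modulation: the audio tone scales the height of the carrier."""
    envelope = 1.0 + depth * math.sin(2 * math.pi * AUDIO_HZ * t)
    return envelope * math.sin(2 * math.pi * CARRIER_HZ * t)

def fm_sample(t, deviation_hz=200.0):
    """Frequency modulation: the audio tone nudges the carrier's frequency.

    The instantaneous frequency is CARRIER_HZ + deviation_hz * cos(2*pi*AUDIO_HZ*t);
    integrating that over time gives the phase used here.
    """
    phase = (2 * math.pi * CARRIER_HZ * t
             + (deviation_hz / AUDIO_HZ) * math.sin(2 * math.pi * AUDIO_HZ * t))
    return math.sin(phase)

# One full cycle of the audio tone (0.02 seconds).
times = [n / SAMPLE_RATE for n in range(960)]
am = [am_sample(t) for t in times]
fm = [fm_sample(t) for t in times]

# In AM the peak height varies with the audio; in FM it never exceeds 1.
print(max(abs(s) for s in am))
print(max(abs(s) for s in fm))
```

In the AM signal the peaks swell and shrink in step with the audio tone, whereas in the FM signal every peak has the same height and only the spacing between peaks changes.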